Research funders are placing increasing emphasis on ensuring that participants in funded projects not only publish their results but also make the research data underlying those results accessible. Editors of scientific journals are likewise increasingly expecting authors, across more and more disciplines, to not only reference the data cited in their articles but also make those data discoverable and accessible.

In the scientific community, there is growing acceptance of the principle that research data—while respecting relevant legal and ethical regulations and taking researchers' interests into account—should be made as open and accessible as possible. In other words, data management should be "as open as possible, as closed as necessary."

For most research grant applications—if research data will be generated during the project—it is already a requirement to fill out a data management plan template provided by the funder, or to submit a brief description of the planned data management. If the project is funded, a more detailed plan must then be developed.

The "Guide to Managing Research Data":

- Provides support for planning the management of research data and preparing the corresponding data management plan;

- Outlines the latest trends and expectations related to research data;

- Includes recommendations and suggestions with the intention of supporting research and researchers.

These recommendations and suggestions can be integrated into research workflows at different rates depending on the scientific field.

Scientific Replication Crisis

A fundamental requirement of modern science, falsifiability, is a crucial component of the reproducibility of scientific measurements and, therefore, their reliability. Today, scientific practice worldwide places great emphasis (for example, through incentive systems) on producing new results. This often sidelines the (re)verification of existing scientific results and the assurance of the reliability of scientific research. In the 2010s, news of the re-examination of the results and circumstances of several well-known scientific experiments made headlines in the global press. This raised widespread doubts, affecting both the public perception of science and scientific discourse in general. The so-called scientific replication crisis[1] has brought new attention to the planned conditions under which research data are generated, their secure, long-term storage and publication, and the importance of open science initiatives.

Research Data

Research data are factual information created, recorded, accepted, and preserved by the scientific community that support the credibility of research results.

Based on how they are generated, research data may arise from:

- Observation-based methods;

- Experiments;

- Simulation processes;

- The use of existing data sources (through collection, selection, interpretation, and processing).

Classified by their level of processing, research data can be:

- Raw or primary data (e.g., data obtained directly from measurement or collection);

- Processed or secondary data (derived from primary data after being processed by the researcher, e.g., recoded, combined, categorized, or used in calculations).

Based on their format, research data can be:

- Digitally generated data;

- Data not originally digital but later digitized; and

- Data neither digitally generated nor digitized (e.g., handwritten notes, field journals).

Examples of research data:

- Spreadsheets

- Results of measurements, applications, simulations, and data files created from them

- Photographs, films, slides

- Drawings

- Audio and video recordings, and their transcriptions

- Protein or gene sequences

- Data files created from questionnaire survey responses

- Interview recordings and transcripts

- Objects acquired and/or produced during the research process – both digital and non-digital

- Text corpora

Research Documentation

Research documentation refers to the collection of files generated alongside research data, closely related to them, and to be handled and stored together with them.

Examples of research documentation:

- Research plans

- Notes, outlines

- Descriptions of methods and workflows

- Questionnaires, interview guides, codebooks

- Models, algorithms, codes, scripts, software developed for research

- Laboratory notes, journals, memos, protocols

Research data life cycle

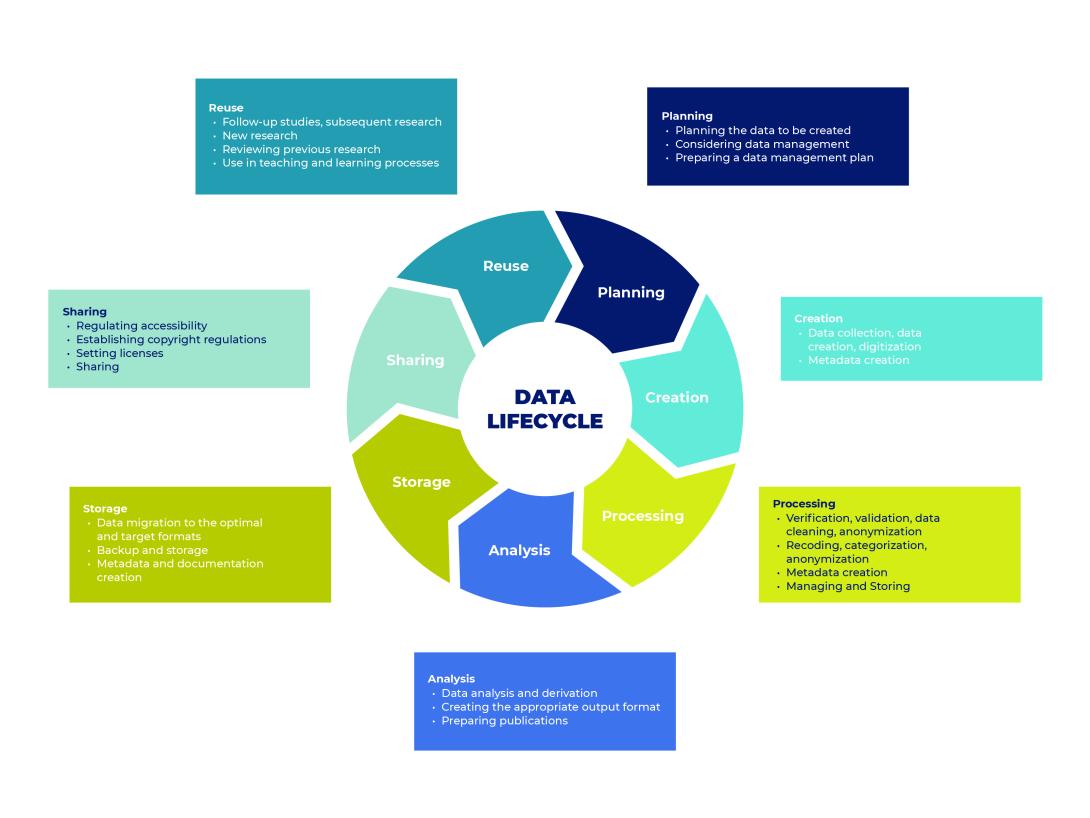

The life cycle of research data can be divided into seven main stages:

- Planning data management

- Data collection and creation

- Data processing

- Data analysis

- Data storage

- Data sharing

- Data reuse

Research data life cycle

Source: https://rdmkit.elixir-europe.org/data_life_cycle, Hungarian translation and visualization by the HUN-REN ARP Project

Research Data Management

Research data management encompasses all decisions and activities related to research data from the planning phase of a research project to long-term storage. It includes how, where, and under what conditions research data is collected, processed, stored, shared with others, archived (stored long-term), made accessible, and reused. Thoughtful research data management supports and optimizes the research process.

FAIR Research Data Management

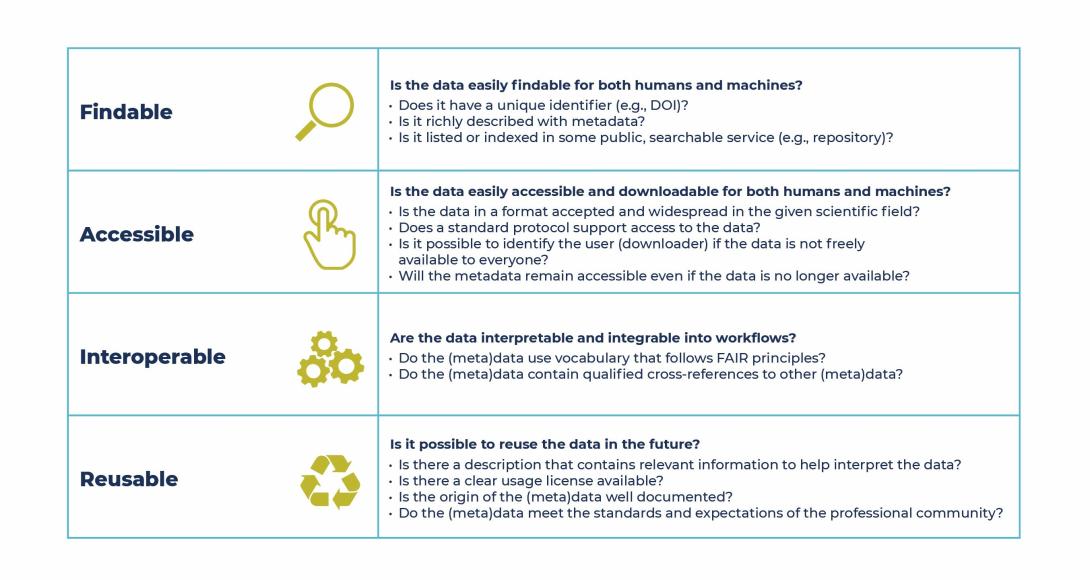

The acronym FAIR stands for Findable, Accessible, Interoperable, and Reusable. These principles were introduced in 2016 by a consortium of researchers and research institutions in the article The FAIR Guiding Principles for scientific data management and stewardship,[1] published in Scientific Data.

The primary goal of the FAIR principles is to support the reuse of scientific data. As the speed, volume, and complexity of data creation in research increase, researchers increasingly rely on machine support in handling data. Therefore, data management must enable computer systems to find, access, work with, and reuse various research data with minimal or no human intervention. According to the original concept, FAIR data management is thus intended primarily to facilitate machine, rather than human (researcher) access to data.

Source: HUN-REN ARP Project

FAIR data management is not the same as Open Data: research data does not necessarily have to be openly accessible to everyone to be considered FAIR. The principles of findability and accessibility mainly apply to metadata describing the research data, as metadata enables data to enter the scientific ecosystem.

[1] Wilkinson, M., Dumontier, M., Aalbersberg, I. et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data 3, 160018 (2016). https://doi.org/10.1038/sdata.2016.18

Open Science and Open Data

Open Science is the result of a 50-year evolution in how scientific research is shared. The Open Science movement promotes collaboration, transparency, accessibility, and usability of scientific research.

The rapid development of digital technologies and the explosive growth of the Internet first enabled wide access to scientific outputs (publications, talks), and later the sharing of research data, intermediate results, and accumulated knowledge generated in earlier phases of the research process.

The goal of Open Science is to make the knowledge generated during scientific research accessible as early as possible in the research process, as widely as possible, ideally freely and openly.

One of the movement’s key insights is that open research results and open data can greatly enhance the visibility and overall impact of research.

The Open Science movement encompasses both publications and research data. Its key components are Open Access and Open Data. Open Access refers to any scientific information, data, or knowledge that is digitally, freely, and legally accessible online, allowing unrestricted and lawful reuse. While "Open Access" primarily refers to publications and studies, "Open Data" is used to signal the openness, free and legal accessibility of research data.

Principles of Open Science

- “As open as possible, as closed as necessary”, and

- “Publish earlier and release more”

The first emphasizes a commitment to open access while calling for careful attention to data and information that require protection. The second encourages sharing knowledge as early as possible in the research process.

One of the safest and most effective ways to make research data openly and freely accessible is through Open Access Repositories. These repositories provide free and open storage and download opportunities for researchers to share their research data or find and reuse the data of others.

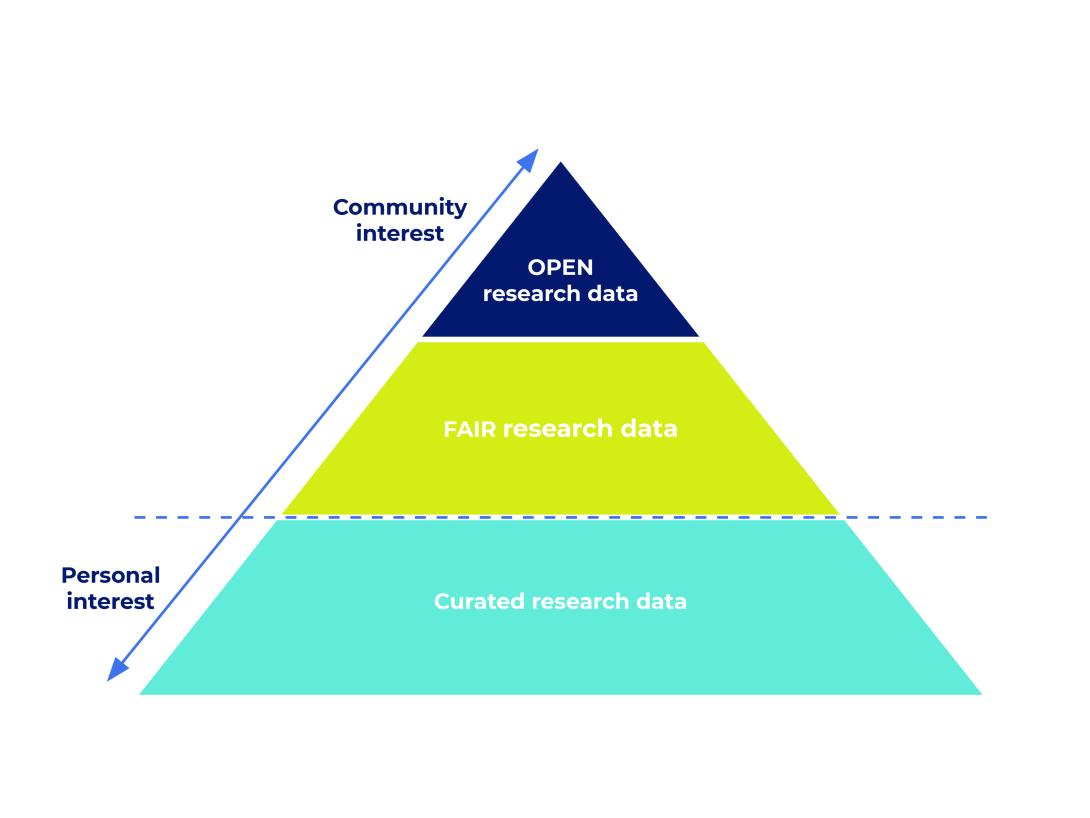

Open Data and FAIR

Open research data and FAIR data do not always overlap. A dataset can be FAIR (for example, its metadata is accessible in a FAIR-compliant way) but not open (the data itself may have restricted access).

One of the goals of the Open Science movement is to bring Open Data and FAIR data closer together by increasing the overlap and expanding the intersection of the two.

The Relationship Between FAIR and Openly Managed Research Data

Source: https://www.dcc.ac.uk/, https://www.slideshare.net/sjDCC/open-fair-data-and-rdm, Hungarian translation and visualization by HUN-REN ARP Project

Non-digital research data

Not all research data is inherently digital. Many researchers keep handwritten logs or field journals, and some materials or documents that qualify as research data—due to their origin, nature, or the nature of the research—are produced or exist primarily or exclusively in non-digital form. Examples include paper questionnaires, paintings, archaeological finds, minerals, or even tissue derived from living organisms.

Digitization of non-digital research data

In the case of several types of non-digital research data, digitization is possible, offering the following advantages:

- sharing and publishing the data becomes simpler and cheaper;

- digital data management is generally more cost-effective;

- the data becomes more easily accessible and searchable;

- access rights and user groups can be clearly defined, increasing data security;

- materials and documents not preserved digitally are more vulnerable to decay and degradation—digital long-term preservation supports their survival and accessibility in proper quality;

- in the event of natural disasters or even human error, research data, objects, or documents available only in non-digital format can be damaged or destroyed—digitization in such cases serves a crucial preservation role.

Metadata for non-digital research data

Digitizing non-digital research data is generally a resource-intensive process. If a research institution has no or only limited capacity (e.g. due to lack of staff or equipment) for digitization, making the metadata of the research data accessible can be a way to increase their visibility and accessibility.

When providing metadata for non-digital data, it is important to record:

- the exact (physical) storage location of the data;

- the conditions and methods of access;

- access rights;

- and as much other relevant information as possible for potential interested researchers, either in the form of metadata or brief descriptions.

Metadata

Metadata serve to identify research data as completely as possible. In long-term data storage (in repositories), metadata stored together with the research data support the searchability and findability of the data. Metadata can be generated manually, automatically by an algorithm or measuring instrument, or through a combination of these.

Metadata represent the set of facts and information about research data—data about data. The most general metadata include the name of the data, its creator, source, date and method of creation, but metadata also include origin, temporal references, geographic location, access conditions, or terms of use.

The concept of metadata can be easily understood through the following concrete example. Digital cameras automatically generate and store certain information about the digital image—that is, the digital file—at the moment of capture. Such information may include:

- the time the photo was taken;

- the resolution of the image;

- the file type;

- the file size.

These are metadata—specifically, automatically generated metadata, which primarily carry technical information about the digital document.

Modern cameras, when properly configured, also store the exact location where the picture was taken. In addition, users can provide further information themselves; for instance, in the case of a photo, one can specify the names of the objects depicted or the event during which the picture was taken. This creates additional metadata, which no longer provide technical but descriptive information about the digital image. These descriptive data support the future reuse and retrievability of the image.

Metadata can therefore be assigned automatically or manually to any digital file, including digitally available research data. Digitally generated metadata (such as the precise minute or second a photo was taken in the camera example) not only support external users in searching for and validating research data, but also provide researchers with highly accurate and important information regarding certain aspects of their research.
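The distinction between automatically generated and manually added metadata can be illustrated with a minimal Python sketch. It reads only what the file system already records about a file (it does not read the metadata embedded inside an image by the camera), and the function names and the descriptive fields are illustrative assumptions rather than part of any standard.

```python
import mimetypes
from datetime import datetime, timezone
from pathlib import Path


def technical_metadata(path):
    """Collect metadata that the file system already stores for a file."""
    p = Path(path)
    info = p.stat()
    mime_type, _ = mimetypes.guess_type(p.name)
    return {
        "file_name": p.name,
        "file_type": mime_type or "unknown",
        "file_size_bytes": info.st_size,
        # Last modification time, reported in UTC in ISO 8601 form.
        "modified": datetime.fromtimestamp(
            info.st_mtime, tz=timezone.utc
        ).isoformat(),
    }


def with_descriptive_metadata(technical, **descriptive):
    """Combine automatic (technical) and manually entered (descriptive) metadata."""
    return {"technical": technical, "descriptive": dict(descriptive)}
```

A call such as `with_descriptive_metadata(technical_metadata("photo.jpg"), event="field trip")` would keep the two kinds of metadata side by side; the file name and the `event` field are hypothetical.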

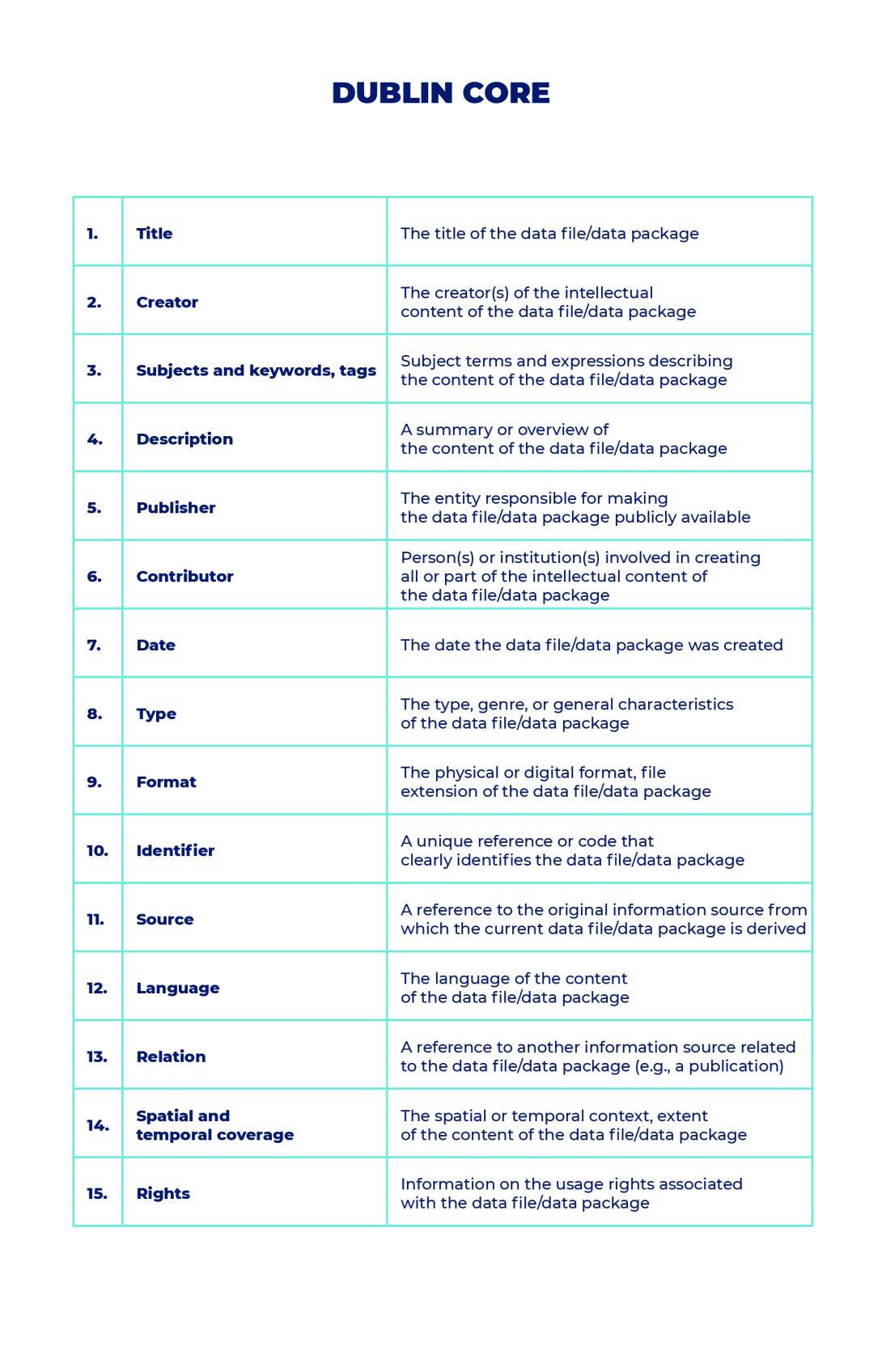

Metadata schema

Both digital documents (e.g., research data) and the digital collections containing them (e.g., data packages) can vary greatly in form and may differ significantly in many aspects and levels. Consequently, the metadata that describe them can also be very diverse, which has made it necessary to develop unified standards for metadata usage—to ensure interoperability and facilitate easier searching. This is how different metadata schemas were created, which greatly contribute to the ease of search and actual discoverability of research data and other digital information sources.

A metadata schema is a defined set of metadata elements (element set) and the associated rules.

One of the most widespread metadata schemas is the Dublin Core. The Dublin Core element set contains descriptive metadata and is general and applicable across all scientific disciplines, making it widely used. It is characterized by simplicity and flexibility; the schema is well-structured and easy to understand.

The name’s first part refers to the workshop location where it was developed in 1995. In Dublin, Ohio, the OCLC/NCSA Metadata Workshop brought together a group of experts with the goal of creating a metadata element set that would be sufficient and appropriate for describing digital information resources. The second part (Core) refers to the idea that the element set is a foundational core that can be expanded.

Its element set has since become an ISO standard; in Hungary, it was issued in 2004 under the title „MSZ ISO 15836 Információ és dokumentáció. A Dublin Core metaadat elemkészlete” (“MSZ ISO 15836 Information and documentation – The Dublin Core metadata element set”).

The 15 core elements of Dublin Core adapted to research data

Source: HUN-REN ARP Project

Different scientific disciplines may use specifically developed metadata standards tailored to the characteristics of the research data typically generated in that field. In the absence of an appropriate standard, individual institutions, projects, or research groups may create their own. When developing a new schema, it is important to ensure that it adheres to the FAIR principles of standardization, that is, it should be consistent and interoperable within the given scientific domain. Currently, several international organizations (EOSC, RDA, GO FAIR) are working on creating frameworks that provide guidance for the development of metadata standards.

Individual repositories often have their own metadata schemas; however, these are generally interoperable. The metadata schema used to describe research data, along with the element set listed within it, is typically suggested by data repositories during the upload process. The repository system attaches the provided metadata to the research data, which helps ensure that the data are properly searchable.
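To make the idea of an element set with associated rules more concrete, the following Python sketch builds a small XML record from the 15 Dublin Core elements. The element names and the namespace URI are those of the Dublin Core element set; the `metadata` wrapper element, the function name, and the sample values are assumptions made for illustration, and real repositories define their own record formats.

```python
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"
DC_ELEMENTS = (
    "title", "creator", "subject", "description", "publisher",
    "contributor", "date", "type", "format", "identifier",
    "source", "language", "relation", "coverage", "rights",
)


def dublin_core_record(fields):
    """Build a simple XML record from a dict of Dublin Core elements.

    Each value may be a string or a list of strings, because
    Dublin Core elements are optional and repeatable.
    """
    unknown = set(fields) - set(DC_ELEMENTS)
    if unknown:
        raise ValueError(f"Not Dublin Core elements: {sorted(unknown)}")
    ET.register_namespace("dc", DC_NS)
    root = ET.Element("metadata")  # wrapper name is an assumption
    for name in DC_ELEMENTS:
        values = fields.get(name, [])
        if isinstance(values, str):
            values = [values]
        for value in values:
            ET.SubElement(root, f"{{{DC_NS}}}{name}").text = value
    return ET.tostring(root, encoding="unicode")
```

Rejecting unknown element names is one simple way to express the "associated rules" of a schema; a discipline-specific schema would typically add further constraints, such as mandatory fields or controlled vocabularies.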

Data Management Plan (DMP)

The written, recorded form of research data management is the Data Management Plan. The Data Management Plan, or DMP, is a brief summary document—typically a few pages long—where the research lead or data steward outlines the research data and how they will be managed, including decisions and activities related to data handling. The goal is to ensure the lawful (according to the defined standards), ethical collection and management of the data, as well as their secure storage during the research and after its conclusion.

Creating a DMP is in the interest of the researcher or research group, but it is increasingly common for funders and grant-awarding organizations to request the first (initial) version of the plan already at the submission of the research proposal. This promotes thoughtful data management, the creation of accessible, sustainable, and reusable data, and the effective application of the principles of knowledge sharing and open science.

Planning research data management and preparing a DMP:

- supports the research itself;

- helps with the conscious management, long-term preservation, and future reuse of research data;

- contributes to the prevention of data loss;

- and, if there are institutional or funding requirements, ensures compliance.

The DMP is a structured document, mostly written in outline form and often arranged in a tabular format. Its depth and detail depend on the researcher’s decisions and/or the requirements of the funder, grant agency, or research institution. It generally includes the following main sections, which can be supplemented with additional relevant data management information:

- Basic information

    - Title of the research

    - Host/affiliated institution

    - Funder of the research

    - Duration of the research

    - Short description of the research

    - Name(s) of participating researcher(s)

    - Name of the data steward

- Research data

    - Method of data collection or creation

    - Type and characteristics of the resulting data

    - Method of data processing

    - Metadata and metadata schema specification and description

- Data storage and sharing

    - Data storage and protection during the research

    - Data sharing, access options, and permissions during the research

    - Data storage and protection after the research ends

    - Data sharing, access options, and permissions after the research ends

    - Scope of data to be destroyed

    - Scope of data and documentation to be deposited in repositories

    - Scope of research data and documentation to be openly/partially/not accessible after the research

    - Location of data access

    - Persistent (unique permanent) identifiers

- Ethical and legal compliance

    - Scope, protection, and handling of personal, sensitive, and confidential data covered by the GDPR

- Costs and resources

    - Costs related to data management

    - Agreements with funders, data providers, or research partners

- Other field-specific information

In some cases, for grants or contracts, the funder or client may provide a DMP template or a detailed guide (e.g., OTKA Data Management Plan, Horizon 2020 Data Management Plan Template). Research institutions may also develop their own templates or guides. In the absence of such resources, the researcher may create the DMP independently, ideally with the help of a data steward or data specialist.

The DMP is a flexible document that may be revised over the course of the research. It is recommended to review it regularly, update it if necessary, and record changes in new versions with version numbers.
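For teams that prefer to keep the plan in a machine-readable form, the outline and the versioning advice above could be sketched in Python as follows. The key names, the `new_version` helper, and the choice of a plain dictionary are all illustrative assumptions; funder or institutional templates take precedence where they exist.

```python
from copy import deepcopy
from datetime import date

# Section names follow the DMP outline; the key names are illustrative.
DMP_TEMPLATE = {
    "version": 1,
    "last_updated": None,
    "basic_information": {
        "title": "", "host_institution": "", "funder": "",
        "duration": "", "description": "",
        "researchers": [], "data_steward": "",
    },
    "research_data": {
        "collection_method": "", "data_types": "",
        "processing_method": "", "metadata_schema": "",
    },
    "storage_and_sharing": {
        "storage_during_research": "", "sharing_during_research": "",
        "storage_after_research": "", "sharing_after_research": "",
        "data_to_destroy": "", "data_to_deposit": "",
        "access_levels": "", "access_location": "",
        "persistent_identifiers": "",
    },
    "ethical_and_legal_compliance": {"personal_and_sensitive_data": ""},
    "costs_and_resources": {"costs": "", "agreements": ""},
    "other_field_specific_information": {},
}


def new_version(dmp, changes, today=None):
    """Return an updated copy of the plan with the version number incremented.

    `changes` maps a section name to the fields to overwrite in it.
    The original plan is left untouched so earlier versions can be kept.
    """
    updated = deepcopy(dmp)
    for section, values in changes.items():
        updated[section].update(values)
    updated["version"] = dmp["version"] + 1
    updated["last_updated"] = (today or date.today()).isoformat()
    return updated
```

Because each revision is a separate object, it can be saved under its own version number (for example with `json.dumps`), which keeps the history of changes traceable.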

Useful links for creating a DMP:

- Zenodo Checklist for Data Management Plan

- Science Europe Guidance Document

- Zenodo Practical Guide to the International Alignment of Research Data Management - Extended Edition

- SND Checklist for Data Management Plan

Online tools for creating a Data Management Plan:

Data Storage

To minimize the risk of data damage or loss, it is important to ensure the secure storage of data generated during each phase of the research. Therefore, research data should always be backed up, preferably in multiple different locations. It is recommended to follow the “here-near-far” principle, meaning one copy should be on the researcher’s own computer, another on a local data storage device (e.g., external hard drive, institutional server), and a third on a remote server (e.g., a repository).
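The “here-near-far” principle can be sketched as a small Python routine that copies a file to several locations and verifies each copy with a checksum. This is a minimal sketch under the assumption that the "near" and "far" locations are reachable as ordinary folders (for example, a mounted institutional share); uploading to a repository normally goes through that repository's own interface. The function names are hypothetical.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path):
    """Compute the SHA-256 checksum of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def back_up(source, destinations):
    """Copy `source` into each destination folder and verify every copy.

    Returns a dict mapping each copy's path to True if its checksum
    matches the original, and False otherwise.
    """
    source = Path(source)
    expected = sha256_of(source)
    results = {}
    for folder in destinations:
        folder = Path(folder)
        folder.mkdir(parents=True, exist_ok=True)
        copy = Path(shutil.copy2(source, folder / source.name))
        results[str(copy)] = sha256_of(copy) == expected
    return results
```

Comparing checksums after copying is a simple way to detect a damaged or incomplete backup before the original is needed.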

When storing research data, it is essential to prevent unauthorized access, data manipulation, or misuse, paying particular attention to the secure storage of sensitive data, and ensuring compliance with relevant contracts, agreements, declarations, regulations, and ethical standards whenever access is granted.

When selecting the method or tool for data storage, the following factors should be considered:

- How long the data needs or is planned to be stored;

- The volume of the data;

- With whom, how many people, and in what way the data should be shared;

- Whether any sensitive data is generated;

- What resources are available;

- Whether there are any institutional or other requirements related to data storage.

Common solutions for storing research data include:

- Personal local storage solutions (e.g., personal computer, external hard drive)

- Institutional local storage solutions (e.g., institutional computer, institutional server, external hard drive)

- Personal cloud-based storage services (e.g., OneDrive)

- Institutional cloud-based storage services (e.g., institutional cloud)

- Git-type repositories

- Personal website

- Institutional/project website

- Databases

- Data repositories

There are two types of data storage:

- Data storage during research

- Long-term data storage after the research has concluded (archiving)

The method and location of both types of data storage should be carefully planned, weighing the above factors and options. It is possible to store data in repositories during the research, but repositories are primarily intended for long-term storage after the project ends. Currently, data repository storage is the most secure method for long-term preservation of research data.

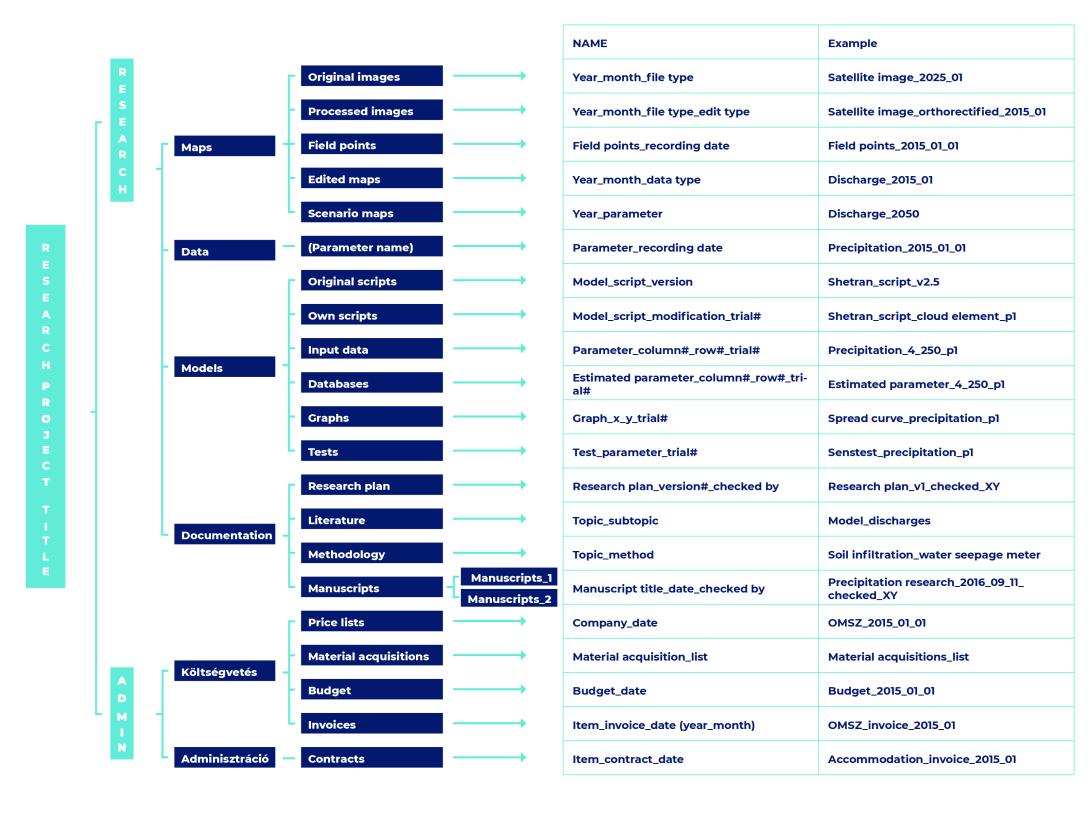

File Structure and File Naming

Folders and data files should be organized and named according to the logic of the given research in a way that makes them understandable to others. All team members working on the project should follow the same file naming rules. The method used to create file and folder structures and naming conventions should be included in the data management plan.

Useful types of information that may be included in file names to help distinguish them:

- Title or acronym of the research/project/experiment

- Location / spatial coordinates

- Researcher’s name or initials

- Date of creation

- Type of data

- Version number
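One way to keep file names consistent across a team is to generate them from the components listed above. The following Python sketch is only an illustration: the order of the components, the underscore separator, the date format, and the two-digit version number are assumptions, and each project should record its own convention in the data management plan.

```python
import re
from datetime import date


def build_file_name(project, data_type, created, version, extension,
                    researcher=None, location=None):
    """Build a file name from project, location, researcher, date, type, version."""
    parts = [
        project, location, researcher,
        created.strftime("%Y%m%d"), data_type, f"v{version:02d}",
    ]
    cleaned = []
    for part in parts:
        if part:
            # Replace anything other than letters, digits and hyphens,
            # so that spaces and special characters never reach the file name.
            cleaned.append(re.sub(r"[^A-Za-z0-9-]+", "-", str(part)).strip("-"))
    return "_".join(cleaned) + "." + extension.lstrip(".")


# Hypothetical project acronym and researcher initials.
print(build_file_name("ARP", "survey", date(2024, 3, 5), 2, "csv", researcher="KJ"))
# prints "ARP_KJ_20240305_survey_v02.csv"
```

Writing the date as year, month, day means that an alphabetical sort of the files is also a chronological one.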

Example of a File Structure and File Naming System

Source: https://www.wur.nl/en/Value-Creation-Cooperation/Collaborating-with-WUR-1/Organising-files-and-folders.htm / Translation and visualization by HUN-REN ARP Project

Publishing and Sharing Research Data

Research data should be made publicly available after the completion of the research (or even during the research period) following the principle of “as open as possible, as closed as necessary.” It is important that publication aligns with institutional, funder, and publisher requirements, the practices of the relevant academic discipline, and the researcher’s own needs, while also adhering to the FAIR principles. The aim is to ensure visibility, accessibility, and long-term preservation.

Possible methods of publication include:

- In a general, subject-specific, or institutional data repository

- As supplementary material alongside a journal article

- In the form of a data paper in a data journal

- In a public database

When publishing research data, it is necessary to consider the declarations, permissions, consents, and contracts previously made, as well as the regulations and recommendations of the selected publication venue.

The nature of the research data must also be taken into account when determining whether and how to share it. Careful consideration is needed in the case of:

- Research data with commercial potential

- Research data under classification or confidentiality restrictions

- Sensitive research data, such as:

    - Personal data

    - Confidential data (e.g., patient information)

    - Data under other types of protection (e.g., environmental protection)

- Third-party data governed by contractual agreements

- Research data that could endanger national or international strategy, autonomy, or security

Data Repository

Research data can be stored long-term in various ways. The most secure location for archiving is a data repository, which is a complex infrastructure designed for the safe and long-term storage, archiving, publication, sharing, and access of digital research data.

Research data published in a repository can be assigned different access permissions. The researcher must ensure that the most appropriate access level is set for their data. Access can be:

- Open – in this case, the research data is freely accessible without restriction, or

- Restricted – in this case, the data is only available to those who request access and are authorized by a designated person (e.g., the data steward or principal investigator of the project).

Why is it beneficial to store research data in a data repository?

The primary goal of depositing data in a repository is to ensure its long-term storage and accessibility.

Benefits of using a data repository:

- Secure archiving

    - Safe, long-term storage

    - Secure data management

- Accessibility

    - Secure (open or restricted) data sharing

- Visibility

    - Searchability

    - Accessibility (especially important for research funded by public money)

    - Data reusability

- Verifiability

    - Ensuring research data can be verified

    - Reliable version control (documenting the extension, replacement, or modification of data)

- Compliance with grant, institutional, and journal requirements

What should we deposit?

Placing research data in a data repository primarily concerns the research data collected, measured, generated, used, and derived during the course of research. However, research data alone is often not interpretable or not sufficiently interpretable, so it is equally important to deposit the information and sources that supplement, explain, place the data in context, and ensure their discoverability in the repository.

The package to be deposited in the repository should include:

- research data

- research documentation

- research algorithm, software, model (if any)

- readme file

- metadata

To ensure that the research remains comprehensible, transparent, and reusable in the future, creating and attaching a readme file upon repository submission is strongly recommended. The readme file summarizes the context of the research and the most important information (a brief description of the research, background, methodology, content of the data package, file descriptions, etc.) so that the research data and documentation can still be understood and used by users (who were not involved in the research and do not know the researchers) even years or decades later. The readme file may be a structured or continuous text document and may be based on any description of the research (e.g., the research plan, project proposal).
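A readme file can be written by hand, but a small script can help ensure that every file in the package is at least mentioned. The Python sketch below is one possible approach; the section headings, the `README.txt` file name, and the function name are assumptions, not a required format.

```python
from pathlib import Path


def write_readme(package_dir, title, description, methodology, file_descriptions):
    """Write a plain-text README listing every file in the data package.

    `file_descriptions` maps relative file paths to short descriptions;
    files without a description are flagged so they are not forgotten.
    """
    package = Path(package_dir)
    lines = [
        title, "=" * len(title), "",
        "Description", description, "",
        "Methodology", methodology, "",
        "Files",
    ]
    for path in sorted(p for p in package.rglob("*") if p.is_file()):
        relative = path.relative_to(package).as_posix()
        if relative == "README.txt":
            continue  # do not list the readme itself
        note = file_descriptions.get(relative, "NO DESCRIPTION YET")
        lines.append(f"- {relative}: {note}")
    readme = package / "README.txt"
    readme.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return readme
```

Plain text is used here because it stays readable without special software, which matches the goal of keeping the package understandable years later.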

Metadata is generally not provided in a separate file, but is entered through the data repository interface by filling out the fields presented during the data upload process.

Depositing research software

Research software refers to source code files, algorithms, scripts, computational workflows, and executable files created during or specifically for a research project. Software or software components used in research but not created specifically for that research (e.g., operating systems, commercial software, code/scripts/algorithms previously written by others) are not considered research software but rather software used in research.

Proper storage and publication of research software developed specifically for a study is essential for ensuring the research can later be understood and replicated.

Additional benefits of depositing research software may include:

- Citability: published research software can be cited by others and listed in a professional CV

- Contribution to the field: researchers in the field may find the methodology, code, or data useful

- Institutional continuity: in institutions with high staff turnover, making research data, documentation, and software available ensures that future team members can understand and replicate the research

- Helping our future selves: documenting research software and related workflows helps researchers themselves retrace steps. If the software needs to be retrieved after the research concludes (due to slow journal review or revisiting the work), careful preservation and accurate documentation can save significant time and effort.

How to deposit?

To ensure that research materials uploaded to a data repository remain readable and reusable in the future, the following preparatory steps are necessary:

- Select appropriate research data and materials; determine which files belong in a data package: carefully select data, documentation, and other materials that substantiate the results or are otherwise valuable for future use (not all files generated during research must be deposited)

- Anonymization: if the research materials contain personal, sensitive, or confidential data, anonymize or hash the data, protect them appropriately, restrict access, or refrain from depositing such data

- Data cleaning: if needed, clean and prepare data for reuse. For example:

    - make sure each table in a spreadsheet starts in cell A1 (the top-left cell of the sheet)

    - avoid embedded tables or charts within spreadsheets

    - avoid merged cells in spreadsheets

    - avoid color coding

    - avoid special characters

- Thoughtful data labeling: use clear labels, descriptions, and explanations for research data in line with disciplinary standards to ensure interpretability. For example:

    - use clear column headers in spreadsheets

    - preferably use single-row headers

    - provide a legend or key for each table

- Use appropriate file formats: if possible, use formats that are standard in the discipline, widely used, open, machine-readable, and not easily modifiable. The deposited file formats may differ from those used during the research.

- Use clear filenames: choose understandable, consistent, content-reflecting names for files.

- Develop a well-structured data and file organization: organize files and data clearly, aligned with disciplinary norms and research logic for future clarity

- Upload separate files: deposit tables as separate files, even if they belong together; deposit Excel sheets as individual tables when possible

- Version control: if multiple versions of a research dataset or documentation are deposited, clearly distinguish them to ensure traceability of changes

- Create a readme file: to ensure interpretability and context, a readme file should be included with the data

- Provide detailed metadata: ensure interpretability and searchability using a metadata schema accepted in the discipline and repository

- Define rights, ethics, and licenses: only deposit data and documentation that meet legal and ethical standards; set access levels to ensure compliance
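The anonymization step above mentions hashing as one option. The Python sketch below shows a keyed hash (HMAC-SHA256) applied to selected columns; the function names and the 16-character truncation are assumptions. Note that hashing of this kind is pseudonymization rather than full anonymization: anyone holding the key can recompute the mapping, and under the GDPR pseudonymized data is still treated as personal data, so access restrictions may still be needed.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed hash (HMAC-SHA256), shortened for readability."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def pseudonymize_rows(rows, columns, secret_key):
    """Return copies of the row dicts with the given columns pseudonymized."""
    return [
        {
            key: pseudonymize(val, secret_key) if key in columns else val
            for key, val in row.items()
        }
        for row in rows
    ]
```

The same input always yields the same pseudonym under a given key, so records belonging to one participant can still be linked. The key must be stored separately from the deposited data and never uploaded with it.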

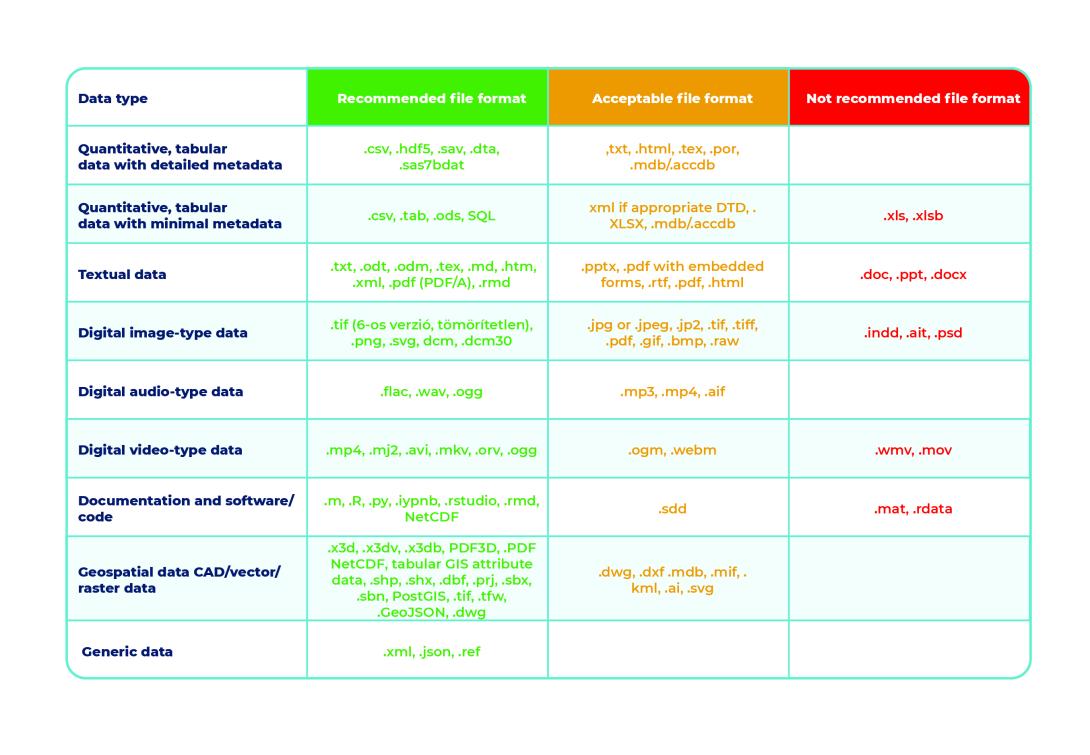
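Some of the data cleaning and labeling advice in the preparatory steps can be checked mechanically once a table has been exported to CSV. The Python sketch below covers only a few structural checks (empty or duplicate column headers and rows of inconsistent length, which often result from merged cells or tables that do not start in the first cell); the function name and the wording of the messages are assumptions, and it does not replace a manual review.

```python
import csv
import io


def check_table(csv_text):
    """Return a list of structural problems found in a CSV export of a table."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        return ["table is empty"]
    problems = []
    header = rows[0]
    if any(not cell.strip() for cell in header):
        problems.append("empty column header (possible merged cells or offset table)")
    if len(set(header)) != len(header):
        problems.append("duplicate column headers")
    # Data rows are numbered from 2 because row 1 is the header.
    for number, row in enumerate(rows[1:], start=2):
        if len(row) != len(header):
            problems.append(
                f"row {number} has {len(row)} cells, expected {len(header)}"
            )
    return problems
```

An empty list means that none of these particular problems were found; issues such as color coding are lost in a CSV export and have to be resolved in the spreadsheet itself.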

What file formats should we use for depositing?

A key issue in long-term storage is the file format of the research data deposited in the repository. International practice offers various recommendations, but the basic principle is consistent: data should be preserved and shared in formats that can be opened with open-source programs and ensure long-term accessibility.

The file formats used during research and those preserved afterward do not necessarily have to match. Editable formats used during research or files generated by special instruments or algorithms may be worth converting into more durable formats at the end of the project. If possible, consider sharing the data in multiple formats.
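As an illustration of converting working data into a more durable format, the sketch below serializes tabular records as plain CSV text using only the Python standard library. The function name `rows_to_csv_text` and the example field names are hypothetical; which target format is appropriate depends on the discipline and on the repository's recommendations.

```python
import csv
import io


def rows_to_csv_text(rows: list[dict]) -> str:
    """Serialize rows (dicts sharing the same keys) as CSV text."""
    if not rows:
        raise ValueError("no rows to export")
    buffer = io.StringIO()
    # The column order follows the keys of the first row.
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical example data; the field names are illustrative only.
measurements = [
    {"sample_id": "S001", "temperature_c": 21.5},
    {"sample_id": "S002", "temperature_c": 22.0},
]
csv_text = rows_to_csv_text(measurements)
```

When the text is written to disk, opening the file with `encoding="utf-8"` and `newline=""` keeps the character encoding explicit and avoids doubled line endings on some platforms.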

Recommended, acceptable and not recommended file formats

[Image]

Source: https://openscience.hu/f-a-i-r-kutatasi-adatkezeles/ and https://ukdataservice.ac.uk/learning-hub/research-data-management/format-your-data/recommended-formats/

Where should we deposit?

The choice of data repository should take both general and research-specific criteria into account.

In 2020, a research group developed general evaluation criteria known as the TRUST Principles. The acronym stands for Transparency, Responsibility, User focus, Sustainability, and Technology.

According to the TRUST Principles, a trustworthy repository should:

- Transparency: have clear and publicly accessible service and data management policies

- Responsibility: ensure data authenticity and integrity and the reliability of repository services

- User focus: provide data management aligned with user needs

- Sustainability: guarantee long-term data management and storage

- Technology: provide a secure, reliable, and permanent service infrastructure

Other important general criteria for selecting a repository may include:

- Security: ensure proper protection of data

- PID provision: assign a persistent (unique) identifier (e.g., DOI) to deposited data packages

- Searchability: offer a metadata schema that supports data discoverability

- Openness: repository and metadata should be publicly accessible

- Customizability: allow setting of access rights to data individually

- FAIR: ensure data management complies with FAIR principles

Additional considerations may include:

- Acceptance in the field: meet the requirements of the journal/funder/research institution

- Free access: uploading and usage should be free (with proper affiliation)

- Helpdesk: supported by a responsive and reliable support team

Some cases require a specific data repository for storing research data, for example when a journal only accepts data deposited in its own repository, or when data from a specific instrument can only be stored in a repository operated by the institution running the instrument.

A fundamental principle is to choose a disciplinary or institutional repository when available. If none is available, select a general-purpose repository. It is recommended that data produced in Hungary be uploaded to a Hungarian repository (especially for publicly funded research).

Institutional data repositories at HUN-REN

Currently available data repositories for HUN-REN researchers include:

- the repository of the Research Documentation Center (KDK), and

- the HUN-REN Data Repository Platform (HUN-REN ARP), a developed and expanded version of the former Concorda repository.

The KDK repository provides access to the research data and documentation (interview recordings, transcripts, guides; survey questionnaires, methodology descriptions, databases; field notes, observation protocols, etc.) of studies conducted using qualitative and quantitative methods by the four institutes of the Centre for Social Sciences (TK). Various formats (text, image, video, etc.) are available. Metadata is always publicly accessible.

The HUN-REN ARP Data Repository is an institutional repository maintained by HUN-REN and developed with the involvement of the TK, SZTAKI, and Wigner research institutes. It is accessible to all HUN-REN research sites and supports data storage across all scientific disciplines. The ARP repository is based on Harvard Dataverse but extends it with additional functionalities as part of a multi-component system.

- Unique, Persistent Identifier (PID)

A reliable data repository must ensure the findability and identifiability of the digital objects (data, data packages) it holds. A key component of this capability is that persistent identifiers are assigned to the deposited digital objects and are included in their metadata. Persistent identifiers serve the purpose of long-term, global, and unambiguous identification of digital objects. They are typically generated as alphanumeric codes with an associated link. One of the main functions of persistent identifiers is to ensure consistent data access—even if the storage location of the data changes. Therefore, persistent identifiers must be entities independent of the data repository. The most common persistent identifiers include DOI, ARK, Handle, ORCID, and ROR. The first three are used for identifying data and data packages, while the last two identify researchers (authors) and research-related institutions, respectively.

The DOI (Digital Object Identifier) is the most widely used identifier for both scholarly publications and research data. Beyond its widespread use and standardization, its advantages include the existence of central metadata repositories (each DOI agency, such as DataCite and Crossref, operates a separate database), the presence and expansion of solutions that facilitate data flow (e.g., Crossref–ORCID integration), and its embedding in scientometrics (e.g., DOI harvesting from articles).

Among standardized identifiers, there are those that can be served by local databases—such as the ARK (Archival Resource Key). Another widely used persistent identifier is Handle, which is a non-commercially developed decentralized identifier system, though it lacks a broader global (or local) metadata repository.
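The idea that a persistent identifier stays stable while the storage location may change can be illustrated with a DOI: the identifier is resolved through the central `https://doi.org/` resolver rather than through the repository's own address. The sketch below normalizes the common ways a DOI is written into such a resolver link. The function name `doi_to_url`, the list of accepted prefixes, and the syntax check are assumptions made for illustration; the pattern is a simple heuristic, not a full validation of DOI syntax, and the DOI used in the usage example is made up.

```python
import re

# Heuristic: a DOI name starts with "10.", followed by a registrant code,
# a slash, and a suffix. Real DOIs may be more varied than this pattern.
DOI_PATTERN = re.compile(r"10\.\d{4,9}/\S+")

# Prefixes that are commonly written in front of a DOI name.
KNOWN_PREFIXES = (
    "https://doi.org/",
    "http://doi.org/",
    "https://dx.doi.org/",
    "http://dx.doi.org/",
    "doi:",
)


def doi_to_url(raw: str) -> str:
    """Return a resolver link for a DOI written in any common form."""
    text = raw.strip()
    lowered = text.lower()
    for prefix in KNOWN_PREFIXES:
        if lowered.startswith(prefix):
            text = text[len(prefix):]
            break
    if not DOI_PATTERN.fullmatch(text):
        raise ValueError(f"not recognized as a DOI: {raw!r}")
    return "https://doi.org/" + text


# Hypothetical DOI, used only to show the normalization.
link = doi_to_url("doi:10.1234/example-dataset")
```

Citing the resolver link instead of the landing page address means that the citation keeps working if the repository reorganizes its pages, provided the DOI record is kept up to date.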

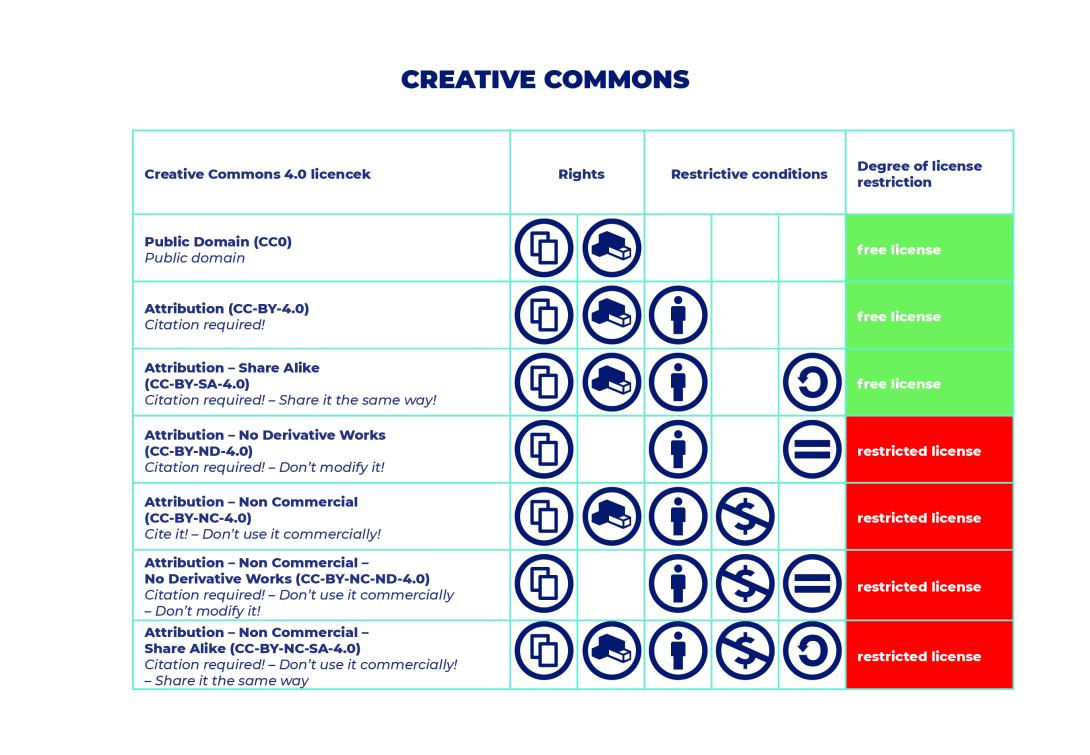

- User Licenses

When publishing research data, it is important to decide how others may use the published data and what they are allowed to do with it. Licenses serve to define these terms. The widely used Creative Commons (CC) licenses are also well-suited for research data, as they are well-known, transparent, and machine-readable by search engines.

Licenses can essentially be interpreted as permissions granted under certain conditions.

Before selecting a license for sharing your data, it is worth checking whether the data owner and/or the research funder has imposed any restrictions in this regard—whether related to funding requirements or local institutional policies.

Creative Commons licenses come in seven different types, formed from combinations of two core rights and four restrictive conditions. These rights and conditions are:

- Right to share[1]

- Right to adapt[2]

- Attribution condition (BY)[3]

- NonCommercial condition (NC)[4]

- NoDerivatives condition (ND)[5]

- ShareAlike condition (SA)[6]

The rights and conditions in detail are as follows:

Right to share: Allows the licensed work to be freely copied, distributed, displayed, and performed.

Right to adapt: Allows the creation of derivative works based on the licensed material.

Attribution condition: Requires that the licensor (the person/entity authorized to issue the license) be credited when the data is used, shared, or otherwise displayed.

NonCommercial condition: Aims to prevent commercial exploitation of the data. This is often used in a dual licensing system, paired with a paid license that allows commercial use.

NoDerivatives condition: Permits copying and distribution of the material but prohibits any modification, adaptation, transformation, or translation. Only the original version may be used and shared. In short: the original material cannot be used to create other materials or adaptations.

ShareAlike condition: Requires that any new works created using the data must be licensed under the same terms as the original data.
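The way the conditions combine can be sketched in a few lines. The example below covers only the six combinations that include the Attribution condition; it does not model a public-domain dedication such as CC0, and it is not a substitute for the official license chooser. The function name `cc_license` and its parameters are hypothetical. NoDerivatives and ShareAlike never appear together, because ShareAlike governs adaptations and NoDerivatives forbids them.

```python
def cc_license(allow_commercial: bool, adaptations: str) -> str:
    """Compose a Creative Commons license code from two decisions.

    adaptations: "yes" (adaptations allowed), "share_alike" (allowed
    under the same license), or "no" (no adaptations allowed).
    """
    if adaptations not in {"yes", "share_alike", "no"}:
        raise ValueError(f"unknown adaptations choice: {adaptations!r}")
    parts = ["BY"]  # Attribution is part of every license covered here.
    if not allow_commercial:
        parts.append("NC")
    if adaptations == "share_alike":
        parts.append("SA")
    elif adaptations == "no":
        parts.append("ND")
    return "CC " + "-".join(parts)


# Enumerate every combination the sketch can produce.
all_codes = sorted(
    cc_license(commercial, choice)
    for commercial in (True, False)
    for choice in ("yes", "share_alike", "no")
)
```

For instance, allowing commercial use and adaptations yields `CC BY`, while forbidding commercial use and requiring the same license for adaptations yields `CC BY-NC-SA`.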

Creative Commons licenses

[Image]

Source: https://hu.wikipedia.org/wiki/Creative_Commons; translation and visualization by the HUN-REN ARP Project; https://creativecommons.org/about/cclicenses/

Creative Commons offers a Hungarian-language license chooser interface that helps determine the most appropriate license for a given research project based on the above decisions.

More detailed guidance (in English) can be found on the Digital Curation Centre website.

[1] To share (right)

[2] To remix (right)

[3] Attribution requirement (BY)

[4] Non-commercial requirement (NC)

[5] No derivative works requirement (ND)

[6] Share alike requirement (SA)

- Ethical Considerations and Data Protection

Sensitive data includes all personal data, as well as any additional data that can be used to identify individuals, species, objects, or locations, and the disclosure of which carries the risk of discrimination, harm, or unwanted attention.

Personal data is any information (e.g., name, address, ID number, physical, mental, economic, cultural background, political, religious, or philosophical views, health data, or information about sexual life or orientation) that can be used to identify a natural person.

In certain research projects, sensitive data (personal, confidential, secret, or otherwise sensitive information) may be generated. These must be handled with special care when choosing storage methods during research, sharing the data with other researchers or individuals, and designing long-term preservation strategies.

The handling of sensitive, particularly personal data must be described in detail in the data management plan, and appropriate handling and ethical approvals (e.g., from affected individuals) must be obtained. During research, special attention must be paid to ensure that the processing of personal data complies with the provisions of the GDPR.

Ethical and data protection concerns arise in any research involving human subjects. Sensitive data can also arise in other fields—for example, in research examining the habitat of endangered species, involving business interests or confidential information, or in other specialized areas.

All research must be conducted with consideration of research ethics and data protection requirements. If needed, prior to or during the research, the necessary institutional or disciplinary statements and permits related to the research data must be obtained, as well as the consent of participants in cases involving personal data.

The terms set out in these documents, as well as in any other permissions, statements, agreements, and contracts obtained before or during the research, must be observed throughout the entire project. Data must be handled accordingly.

When handling sensitive data, the following considerations should be carefully evaluated:

- During research preparation:

  - During the planning phase, it must be assessed and stated whether sensitive data will be generated.

  - Only essential personal data should be recorded and stored.

  - Participants must be informed and provide consent, stating how the research will protect sensitive (especially personal) data at all stages, including sharing and reuse.

  - If personal data is to be preserved or made accessible long term after the project ends, written consent must be obtained from all affected participants at the start of the research for storing and/or disclosing this data.

- During the research:

  - Extra attention must be given to storing sensitive/personal data, managing access, and determining how and where it will be shared.

  - Personal data should only be processed and stored when absolutely necessary, and for the shortest possible time.

  - Participation in the research must be voluntary.

- After the research ends:

  - If personal data is no longer needed for research purposes, it must be safely and completely deleted.

  - If sensitive/personal data cannot be deleted, pseudonymization or anonymization should be used to reduce potential risks.

  - According to the GDPR, anonymized data is no longer subject to data protection regulations.

  - When depositing research data, extra care must be taken to comply with the repository’s terms of use, especially when the data contains sensitive or personal information.

  - In most cases, only data that does not contain sensitive or personally identifiable information may be deposited.

  - Exceptions apply if:

    - All affected individuals (e.g., funders, research leaders, participants) have provided explicit written consent for storing and disclosing their personal/sensitive data, and the uploader ensures proper access controls.

    - All identifiable persons are deceased, and there is no legal restriction on the release of personal data or information.

    - Repository access to data containing personal/sensitive information is subject to permission (with appropriate system settings and permissions aligned with the uploader’s intentions).
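Pseudonymization, mentioned above as one way to reduce risk, can be sketched as replacing direct identifiers with keyed hashes. This is only one possible technique and an illustrative assumption, not a method prescribed by this guide; the function names `pseudonymize` and `pseudonymize_rows`, the truncation length, and the example field names are hypothetical. As long as the secret key exists, the result can be linked back to individuals, so it remains personal data under the GDPR and is not anonymized; other fields in the record may also identify a person indirectly.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes, length: int = 16) -> str:
    """Replace an identifier with a truncated keyed hash (HMAC-SHA256)."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:length]


def pseudonymize_rows(rows: list[dict], id_field: str, secret_key: bytes) -> list[dict]:
    """Return copies of the rows with the identifier field replaced."""
    return [
        {**row, id_field: pseudonymize(str(row[id_field]), secret_key)}
        for row in rows
    ]


# Hypothetical records and key; a real key must be generated randomly
# and stored separately from the data, with restricted access.
records = [
    {"participant": "Jane Doe", "answer": 4},
    {"participant": "John Roe", "answer": 2},
]
example_key = b"replace-with-a-random-secret"
protected = pseudonymize_rows(records, "participant", example_key)
```

The same identifier always maps to the same pseudonym under a given key, which keeps records of one participant linkable across files without exposing the name.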

Artificial Intelligence in Research

The use of artificial intelligence (AI) in research is increasingly common, although the degree varies by discipline. AI-based algorithms and tools are being used more frequently.

If data, analysis, text, papers, or any other research material has been created using AI, the method and tool applied must be clearly indicated and cited in a way that is traceable and verifiable.

- Costs of Research Data Management

When planning a research project, it is important to account for the costs associated with managing research data and to plan for their funding, ensuring these are integrated into the overall research budget.

Examples of such costs may include:

- Costs of data storage during the research (e.g., physical or digital storage media, fees for paid cloud services)

- Costs of preparing the data for archiving after the project concludes (e.g., data organization and data cleaning)

- Costs related to anonymization or other procedures necessary for handling personal, sensitive, or confidential data

- Costs of long-term preservation and sharing of research data (e.g., fees for creating and maintaining a research website, repository service fees)

- Costs associated with sharing data according to FAIR principles (e.g., fees for data steward support, persistent identifier costs)

The actual costs incurred may vary significantly depending on the researcher’s host institution and the range of services it provides free of charge. It is advisable during the planning phase to thoroughly investigate the available free or subsidized options and support mechanisms.

The UK Data Service Data management costing tool and checklist can be useful for planning and estimating the costs related to research data management.

- Terms and Definitions Related to Research Data

Key terms and definitions related to research data and research data management can be found on the Glossary subpage of the Data Repository Platform website, as well as in the index of the Framework for Open and Reproducible Research Training website.

- Promoting Knowledge of Repository Use and Research Data Management Consulting in the HUN-REN ARP Project

In addition to developing repository infrastructure, a key objective of the HUN-REN Data Repository Platform project is to promote knowledge related to the use of data repositories; to create or support the development of data management policies and regulations at the HUN-REN and institutional levels; and to adopt national and international standards, recommendations, and best practices for data and metadata management and storage. These efforts aim to establish a FAIR data repository culture within the HUN-REN institutional network.

To support this goal:

- The HUN-REN ARP website continuously publishes updated educational content on research data and its management.

- Researchers are kept informed about upcoming events and activities through the website and various communication channels.

- HUN-REN ARP experts regularly deliver lectures and training sessions; recordings of past events are available on the HUN-REN ARP website.

- Through the HUN-REN ARP Ambassador Program, the foundation for building an institutional data steward network has been laid, along with the development of institutional best practices.

- HUN-REN ARP staff are available to help with any questions or problems related to this topic.

Researchers within the HUN-REN network can access information from the HUN-REN ARP Portal and consult with ARP professionals. The ARP team is happy to provide consultations and support at any stage of research regarding data management and repository usage. Inquiries can be sent to: @email